The first time I ran into this issue, I had no idea what had happened, so I asked someone on my team. He told me to resize the disk of Docker's VM and then rebuild the database using our scripts.

Today I was trying to query some data from MySQL and the connection failed. So I opened Docker Desktop to check the logs and found it reporting that only 0.00 GB of disk was available.

So this happened again. Last time, I thought it might be due to too much data in my database, so I refreshed the DB and it worked. But this time it came back after only two months or less, and even as a MySQL newbie I knew the data shouldn't grow that fast. So I set out to find the root cause and a solution.

At first I googled it, and all the answers said it's caused by too many unused containers or volumes. So I tried `docker system prune`, which reclaimed less than 1 MB, so I knew that wasn't the cause (after all, I don't have many images built).

That step actually took me far too long, because I was also trying to figure out the Docker commands and how to clean up the system from the inside (by logging in to the container).

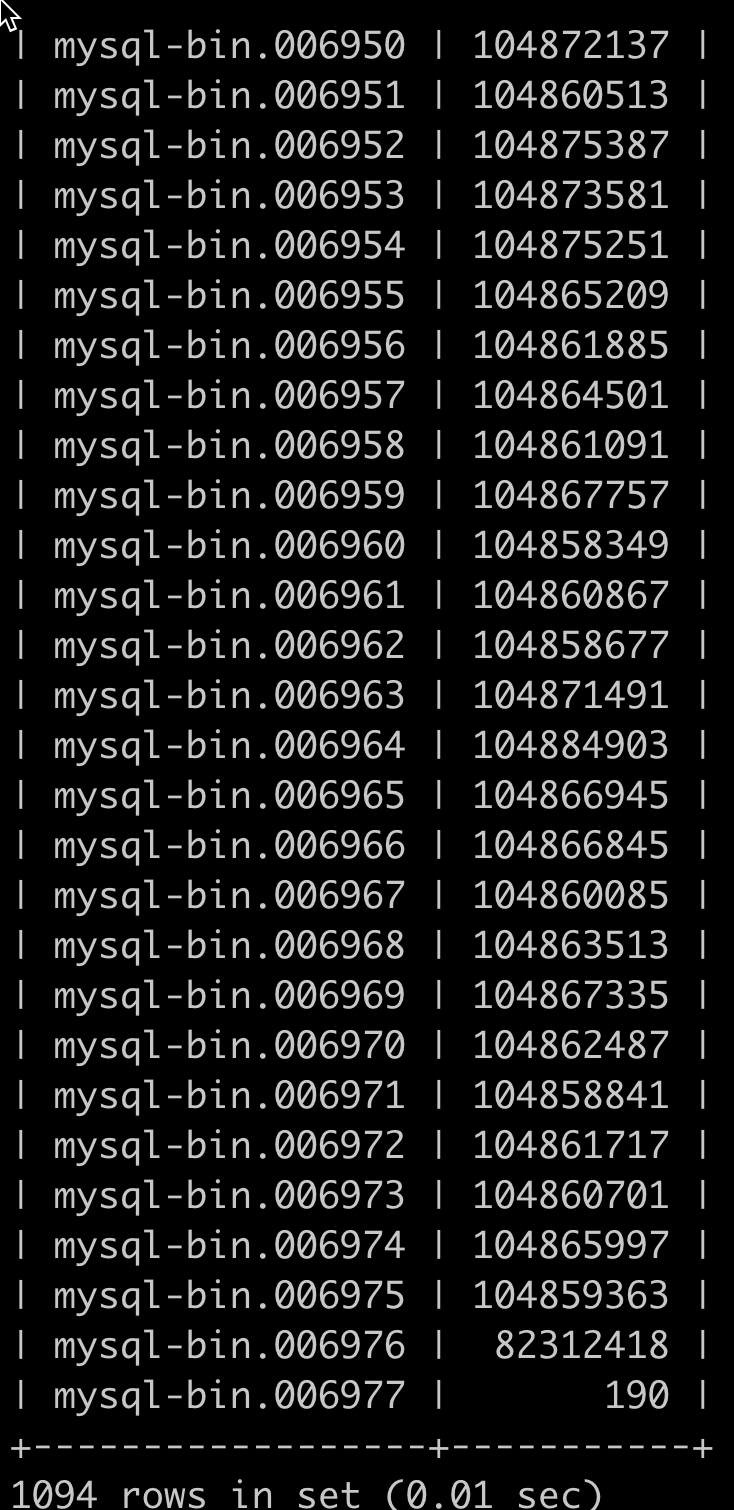

Then I gave up on that and started clicking around Docker Desktop, trying to find the disk-size setting. While doing that, I found the detailed view of my MySQL volume, which held a huge number of binary log (binlog) files, all of the same size. (MySQL starts a new binlog file whenever the current one reaches `max_binlog_size`, 1 GB by default, which explains the identical sizes.) So I tried cleaning up the binlogs, and it worked! (Yeah)

You can delete the files one by one in Docker Desktop, or log in to MySQL and purge them there (which also keeps MySQL's binlog index in sync).


First, open a shell in the MySQL container:

```shell
docker exec -it <YOUR_CONTAINER_ID> /bin/sh
```

Then log in to MySQL (for example with `mysql -u root -p`) and list the binary logs:

```sql
SHOW BINARY LOGS;
```
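To confirm that binlogs really are what is eating the disk, you can add up the `File_size` column of `SHOW BINARY LOGS`. Below is a minimal Python sketch, assuming you have already copied the rows out as `(Log_name, File_size)` pairs; `summarize_binlogs` is a hypothetical helper, and the file names and sizes in the example are made up.

```python
from typing import Iterable, Tuple


def summarize_binlogs(rows: Iterable[Tuple[str, int]]) -> str:
    """Summarize rows shaped like SHOW BINARY LOGS output: (Log_name, File_size in bytes)."""
    row_list = list(rows)
    total_bytes = sum(size for _, size in row_list)
    total_gib = total_bytes / 1024**3
    return f"{len(row_list)} binlog files, {total_gib:.2f} GiB in total"


# Hypothetical rows; on a real server, copy Log_name and File_size from SHOW BINARY LOGS.
sample = [
    ("binlog.000001", 1073741824),
    ("binlog.000002", 1073741824),
    ("binlog.000003", 536870912),
]
print(summarize_binlogs(sample))  # prints: 3 binlog files, 2.50 GiB in total
```

If the total is close to what Docker Desktop reports for the volume, the binlogs are the culprit.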


Then delete them. I only do this because I'm running MySQL locally for debugging (replicas and point-in-time recovery depend on binlogs). Do NOT do this if you are in PRODUCTION!


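```sql
-- remove binlog files older than 10 days (table data is untouched; PURGE MASTER LOGS is an older synonym)
PURGE BINARY LOGS BEFORE DATE_SUB(CURRENT_DATE, INTERVAL 10 DAY);

-- or remove binlog files older than a specific datetime
PURGE BINARY LOGS BEFORE '2023-04-15 00:00:00';
```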
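If you end up purging regularly, you might want a script to produce the explicit cutoff that `DATE_SUB(CURRENT_DATE, INTERVAL 10 DAY)` computes (midnight of the day N days before today). This is a minimal Python sketch; `purge_statement` is a hypothetical helper that only builds the SQL string and does not run it. It uses the local clock of the machine running the script, which may differ from the MySQL server's time zone.

```python
from datetime import date, datetime, time, timedelta
from typing import Optional


def purge_statement(days: int, today: Optional[date] = None) -> str:
    """Build a PURGE BINARY LOGS statement whose cutoff is midnight `days` days before `today`,
    mirroring DATE_SUB(CURRENT_DATE, INTERVAL <days> DAY)."""
    if days < 0:
        raise ValueError("days must be non-negative")
    today = today or date.today()
    cutoff = datetime.combine(today - timedelta(days=days), time.min)
    return f"PURGE BINARY LOGS BEFORE '{cutoff:%Y-%m-%d %H:%M:%S}';"


print(purge_statement(10, today=date(2023, 4, 25)))
# prints: PURGE BINARY LOGS BEFORE '2023-04-15 00:00:00';
```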


You could also check whether `expire_logs_days` is set too high in your MySQL configuration file. In my Docker setup, that file lives in the mysql directory inside the docker directory.
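On MySQL 8.0, binary logging is enabled by default and `expire_logs_days` is deprecated in favour of `binlog_expire_logs_seconds`, whose default is 2592000 seconds (30 days), so a busy local instance can hold a month of binlogs. A minimal sketch, assuming MySQL 8.0 and an extra option file your container loads (the official `mysql` image reads `.cnf` files from `/etc/mysql/conf.d/`): the hypothetical `binlog_retention_cnf` helper below renders a `[mysqld]` fragment with a shorter retention.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def binlog_retention_cnf(days: int) -> str:
    """Render a [mysqld] option-file fragment that keeps binary logs for `days` days (MySQL 8.0+)."""
    if days < 1:
        raise ValueError("days must be at least 1")
    return f"[mysqld]\nbinlog_expire_logs_seconds = {days * SECONDS_PER_DAY}\n"


print(binlog_retention_cnf(3), end="")
# prints:
# [mysqld]
# binlog_expire_logs_seconds = 259200
```

Save the output as a `.cnf` file that your container loads and restart MySQL. Alternatively, on MySQL 8.0 `SET PERSIST binlog_expire_logs_seconds = 259200;` applies the same value at runtime and keeps it across restarts.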

=== Update, 3 days later ===

Docker's disk is almost full again!!! I checked, and it's the binlogs again. So I decided to look at what the binlogs contain, to see what MySQL keeps doing.

I opened a random binlog, and almost all of its entries were about message processing. So I went back to the Tomcat console as well, which kept printing messages like:

```
Caused by: org.apache.activemq.transport.InactivityIOException: Cannot send, channel has already failed: tcp://localhost:61617
```

I looked through some Stack Overflow answers, and people said this happens when the broker URL is wrong, so the connection keeps failing.
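A quick way to test whether a broker URL is even reachable is to open a plain TCP connection to its host and port. The Python sketch below is a minimal check, assuming a simple `tcp://host:port` URL (it does not handle ActiveMQ `failover:(...)` URLs); `broker_reachable` is a hypothetical helper. A successful connection only shows that something is listening on that port, not that it is the broker you expect.

```python
import socket
from urllib.parse import urlsplit


def broker_reachable(url: str, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to the host:port of a tcp://host:port URL succeeds."""
    parts = urlsplit(url)
    if parts.scheme != "tcp" or parts.hostname is None or parts.port is None:
        raise ValueError(f"expected tcp://host:port, got {url!r}")
    try:
        # create_connection tries every address the host resolves to (IPv4 and IPv6).
        with socket.create_connection((parts.hostname, parts.port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or the host could not be resolved.
        return False


# Example: broker_reachable("tcp://localhost:61617")
```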

Meanwhile, I was working on a new enhancement to one of our product features, and its job was stuck pending as well. At first I thought it was a bug in my code. However, after spending some time on the messaging issue in the middle of the day, I started wondering whether the two problems were related. So I filtered the logs by this job's log tag and found that all the jobs were queued.

I checked Docker: the broker image is configured with a URL and port that don't match the tcp://localhost:61617 we had been using. So I searched the whole repo and replaced every broker URL in the configuration files with the one from Docker.

It works. Perfect.
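The repo-wide search-and-replace can also be scripted. This is a minimal Python sketch, assuming the broker URL appears verbatim in UTF-8 config files with the hypothetical extensions listed in `patterns`; `replace_in_configs` is a hypothetical helper, and the new URL in the example is a placeholder for whatever your Docker setup actually exposes. It rewrites files in place, so commit first and review the result with `git diff`.

```python
from pathlib import Path
from typing import Iterable, List


def replace_in_configs(
    root: str,
    old: str,
    new: str,
    patterns: Iterable[str] = ("*.properties", "*.xml", "*.yml", "*.yaml"),
) -> List[Path]:
    """Replace every occurrence of `old` with `new` in matching files under `root`.

    Returns the files that were changed.
    """
    changed: List[Path] = []
    for pattern in patterns:
        for path in Path(root).rglob(pattern):
            if not path.is_file():
                continue
            text = path.read_text(encoding="utf-8")
            if old in text:
                path.write_text(text.replace(old, new), encoding="utf-8")
                changed.append(path)
    return changed


# Example (the new URL is a placeholder, not a real value):
# replace_in_configs(".", "tcp://localhost:61617", "tcp://<BROKER_HOST>:<BROKER_PORT>")
```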

====

Enjoy!
