How to improve system performance with caching

Deduplication cache

Local vs external cache

Adding data to cache (explicitly, implicitly)

Cache data eviction (size-based, time-based, explicit)

Expiration vs refresh

-

Local (private) cache: lives on the same machine as the app itself, e.g. Google Guava Cache. Simple and fast, but not scalable because its size is limited to a single machine, and it offers no fault tolerance or durability.

-

External (shared/remote) cache: a separate storage service, e.g. Redis or Memcached. Scalable (it can use the capacity of all cache servers), and fault tolerant and durable if it supports replication.

-

A hash table keeps every entry until it is explicitly removed. A cache has a limited size and evicts entries automatically.

-

Adding data to the cache:

- Explicitly: the application calls put.

- Implicitly: when a get finds that the data is not in the cache, the data is loaded and added to the cache.

  - Synchronously: the caller waits for the data to be loaded from the data store.

  - Asynchronously: the get call returns immediately, and a background thread runs the refresh job.
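The explicit and implicit (synchronous) paths above can be sketched as follows. This is a minimal illustration, not any particular library's API: `LoadingCache`, `load_from_store`, and the dict used as a data store are hypothetical names made up for the example.

```python
from typing import Any, Callable, Dict, Hashable


class LoadingCache:
    """Toy cache supporting explicit puts and implicit, synchronous loads."""

    def __init__(self, loader: Callable[[Hashable], Any]) -> None:
        self._loader = loader  # hypothetical function that reads the data store
        self._entries: Dict[Hashable, Any] = {}

    def put(self, key: Hashable, value: Any) -> None:
        """Explicit add: the caller supplies the value."""
        self._entries[key] = value

    def get(self, key: Hashable) -> Any:
        """Implicit add: on a miss, load synchronously and remember the result."""
        if key not in self._entries:
            self._entries[key] = self._loader(key)  # the caller waits here
        return self._entries[key]


# Usage with a stand-in data store (a plain dict).
data_store = {"user:1": "Alice"}
load_calls = []


def load_from_store(key):
    load_calls.append(key)  # record every trip to the data store
    return data_store[key]


cache = LoadingCache(load_from_store)
cache.put("user:2", "Bob")    # explicit add
first = cache.get("user:1")   # miss: loaded from the data store
second = cache.get("user:1")  # hit: no second load
```

The asynchronous variant differs only in that the load runs on a background thread while `get` returns right away.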

-

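Cache eviction:

- Size-based: an eviction policy such as LRU (least recently used) or LFU (least frequently used) decides which entry to drop.

- Time-based: each entry has a TTL (time to live).

  - Passively: when an entry is accessed and found to be expired, it is evicted.

  - Actively: a background thread runs at regular intervals and removes expired entries.

- Explicitly: the application removes the entry.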
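A rough sketch of how these eviction modes can coexist in one structure, assuming an LRU policy and a single TTL for all entries. `LruTtlCache` and its method names are invented for illustration, and the injectable `clock` exists only so the example can simulate time passing.

```python
import time
from collections import OrderedDict


class LruTtlCache:
    def __init__(self, max_size, ttl_seconds, clock=time.monotonic):
        self._max_size = max_size
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = OrderedDict()  # key -> (value, expires_at), LRU first

    def put(self, key, value):
        self._entries[key] = (value, self._clock() + self._ttl)
        self._entries.move_to_end(key)  # mark as most recently used
        while len(self._entries) > self._max_size:
            self._entries.popitem(last=False)  # size-based: evict the LRU entry

    def get(self, key):
        item = self._entries.get(key)
        if item is None:
            return None
        value, expires_at = item
        if self._clock() >= expires_at:
            del self._entries[key]  # passive expiration: evicted on access
            return None
        self._entries.move_to_end(key)
        return value

    def remove(self, key):
        """Explicit removal."""
        self._entries.pop(key, None)

    def purge_expired(self):
        """Active expiration: what a periodic background thread would call."""
        now = self._clock()
        expired = [k for k, (_, exp) in self._entries.items() if now >= exp]
        for k in expired:
            del self._entries[k]
        return len(expired)


# Usage with a fake clock so time can be advanced by hand.
now = [0.0]
cache = LruTtlCache(max_size=2, ttl_seconds=10, clock=lambda: now[0])
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")     # "a" becomes the most recently used entry
cache.put("c", 3)  # over capacity: "b", the least recently used, is evicted
```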

-

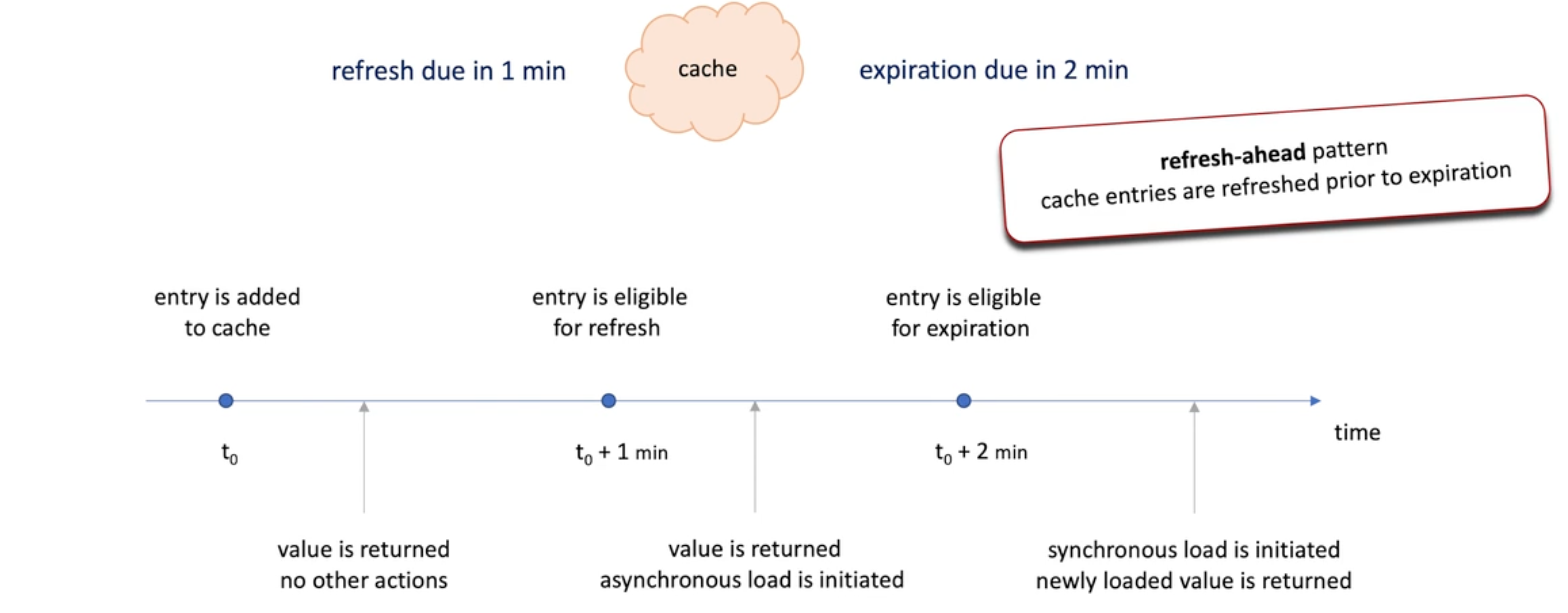

Expiration vs refresh (update without eviction)

- Expiration: synchronous. When an entry has expired, the app waits for the new value to be loaded from the data store.

- Refresh: the existing entry is returned, and the new value is loaded asynchronously in the background.

- Refresh is especially good for hot keys, i.e. keys that are read very frequently, because a refresh does not block reads.
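The difference can be sketched with a cache that has both thresholds. This is an illustrative toy under assumed names (`RefreshingCache`, `refresh_after`, `expire_after`, `wait_for_refresh`); the helper that waits for the background thread is there only to make the example deterministic.

```python
import threading
import time


class RefreshingCache:
    def __init__(self, loader, refresh_after, expire_after, clock=time.monotonic):
        self._loader = loader
        self._refresh_after = refresh_after
        self._expire_after = expire_after
        self._clock = clock
        self._entries = {}     # key -> (value, loaded_at)
        self._refreshing = {}  # key -> background thread reloading that key
        self._lock = threading.Lock()

    def _load(self, key):
        value = self._loader(key)
        with self._lock:
            self._entries[key] = (value, self._clock())
            self._refreshing.pop(key, None)
        return value

    def get(self, key):
        with self._lock:
            entry = self._entries.get(key)
            if entry is not None:
                value, loaded_at = entry
                age = self._clock() - loaded_at
                if age < self._refresh_after:
                    return value  # fresh entry
                if age < self._expire_after:
                    # Refresh: serve the old value, reload in the background.
                    if key not in self._refreshing:
                        t = threading.Thread(
                            target=self._load, args=(key,), daemon=True
                        )
                        self._refreshing[key] = t
                        t.start()
                    return value
        # Missing or expired: the caller blocks until the load finishes.
        return self._load(key)

    def wait_for_refresh(self, key):
        with self._lock:
            t = self._refreshing.get(key)
        if t is not None:
            t.join()


# Usage with a fake clock and a loader that returns a new version each time.
now = [0.0]
version = [0]


def load(key):
    version[0] += 1
    return f"{key}-v{version[0]}"


cache = RefreshingCache(load, refresh_after=60, expire_after=120,
                        clock=lambda: now[0])
v1 = cache.get("k")  # miss: blocking load
now[0] = 90
v2 = cache.get("k")  # past refresh time: old value returned, reload started
cache.wait_for_refresh("k")
v3 = cache.get("k")  # the refreshed value
now[0] = 300
v4 = cache.get("k")  # past expiration time: blocking load again
```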

-

An example: a cache with a refresh time of 1 min and an expiration time of 2 min:

-

Back to the messaging system: duplicate messages. How they happen: the message is accepted and processed by the broker, but the ack is somehow lost, so the producer thinks the send failed and sends the same message again.

- There is a sequence key or deduplication ID in the message metadata. If it is not provided with the message, the broker may generate one itself based on the message.

- If the broker hasn't seen the message (checked by deduplication ID), the message is accepted;

- Otherwise, it is a duplicate and is not accepted.
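The broker-side check can be sketched as below. Two things here are assumptions rather than something the notes specify: IDs are remembered only for a fixed time window (`ttl_seconds`), and a missing ID is derived from a SHA-256 hash of the message body. `DeduplicationCache` and `dedup_id_for` are hypothetical names.

```python
import hashlib
import time


def dedup_id_for(message_body: bytes, provided_id=None) -> str:
    """Use the producer's ID if present, else derive one from the body (assumption)."""
    return provided_id or hashlib.sha256(message_body).hexdigest()


class DeduplicationCache:
    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._seen = {}  # deduplication ID -> time at which it is forgotten

    def accept(self, dedup_id: str) -> bool:
        """Return True if the message is new, False if it is a duplicate."""
        now = self._clock()
        expires_at = self._seen.get(dedup_id)
        if expires_at is not None and now < expires_at:
            return False  # seen recently: duplicate, not accepted
        self._seen[dedup_id] = now + self._ttl
        return True


# Usage: the producer retries the same message after a lost ack.
now = [0.0]
dedup = DeduplicationCache(ttl_seconds=300, clock=lambda: now[0])
msg_id = dedup_id_for(b"order-created:42")
first_attempt = dedup.accept(msg_id)  # accepted
retry = dedup.accept(msg_id)          # rejected as a duplicate
```

A real broker would also need to purge old IDs (for example with the eviction techniques described earlier) so the set of seen IDs does not grow without bound.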

Metadata cache

Cache-aside pattern

Read-through and write-through patterns

Write-behind (write-back) pattern

- The previous problems focused on how the cache itself handles data;

- Now we focus on another typical problem: how to keep the data in the data store and in the cache consistent.

- Who is in charge of maintaining consistency? Either the application (which uses both stores) or the cache.

- Application-managed: the cache-aside pattern (widely used).

  - The application looks for an entry in the cache; if it is there (cache hit), it is returned immediately;

  - If it is not there (cache miss), the application fetches the data from the data store;

  - It then puts the new entry into the cache.

  - Not suitable for latency-critical systems: every cache miss results in three round trips (cache lookup, data store read, cache write).

  - Stale data when data changes frequently in the data store: the cache doesn't know about data store changes (mitigated by setting an expiration time on cache entries).

  - Prone to cache stampedes: multiple threads simultaneously query the same entry from the data store after a cache miss, which is a problem for large-scale systems.
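The cache-aside read path above, as a minimal sketch. Plain dicts stand in for the external cache and the data store, and `get_with_cache_aside` and `read_from_store` are hypothetical helper names.

```python
cache = {}                           # stand-in for an external cache
data_store = {"user:1": "Alice"}     # stand-in for the data store
store_reads = []                     # records every data store read


def read_from_store(key):
    store_reads.append(key)
    return data_store.get(key)


def get_with_cache_aside(key):
    value = cache.get(key)           # round trip 1: cache lookup
    if value is not None:
        return value                 # cache hit
    value = read_from_store(key)     # round trip 2: data store read
    if value is not None:
        cache[key] = value           # round trip 3: populate the cache
    return value


first = get_with_cache_aside("user:1")   # miss: three round trips
second = get_with_cache_aside("user:1")  # hit: one round trip
```

Nothing in this code stops two threads that miss at the same moment from both calling `read_from_store`, which is the stampede behavior mentioned above.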

- Cache-managed: reads and writes go through the cache.

  - Read-through: on a cache miss, the cache itself reads the data from the data store (synchronously).

  - Write-through: modifications to the cache are written to the data store (synchronously).

  - Advantages:

    - Simplifies the application's data access code;

    - Helps mitigate the cache stampede problem: the cache lets only one request read from the data store and puts the others on a waiting list; once the data is fetched, it sends the response to every client on the waiting list. This is called request coalescing.

  - Cons:

    - The cache may contain a lot of rarely used data (a problem for small caches);

    - The cache becomes a critical component (if it fails, the system goes down).
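One way to picture read-through with request coalescing, plus a synchronous write-through `put`, is the sketch below. The "waiting list" is modeled with a shared `Future` per key; `ReadThroughCache`, `read_store`, and `write_store` are assumed names, and a real cache would add eviction and error policies on top.

```python
import threading
import time
from concurrent.futures import Future


class ReadThroughCache:
    def __init__(self, read_store, write_store):
        self._read_store = read_store
        self._write_store = write_store
        self._entries = {}
        self._in_flight = {}  # key -> Future shared by all waiting callers
        self._lock = threading.Lock()

    def get(self, key):
        with self._lock:
            if key in self._entries:
                return self._entries[key]
            future = self._in_flight.get(key)
            is_leader = future is None
            if is_leader:  # first request for this key does the load
                future = Future()
                self._in_flight[key] = future
        if is_leader:
            try:
                value = self._read_store(key)
                with self._lock:
                    self._entries[key] = value
                future.set_result(value)
            except Exception as exc:
                future.set_exception(exc)
            finally:
                with self._lock:
                    self._in_flight.pop(key, None)
        return future.result()  # followers block here until the leader is done

    def put(self, key, value):
        self._write_store(key, value)  # write-through: data store first, sync
        with self._lock:
            self._entries[key] = value


# Usage: five concurrent readers of the same missing key.
store = {"k": "v"}
reads = []


def slow_read(key):
    reads.append(key)
    time.sleep(0.05)  # simulate a slow data store
    return store[key]


def write(key, value):
    store[key] = value


cache = ReadThroughCache(slow_read, write)
results = []
threads = [threading.Thread(target=lambda: results.append(cache.get("k")))
           for _ in range(5)]
for t in threads:
    t.start()
for t in threads:
    t.join()
cache.put("k2", "v2")
```

All five readers get the value, but the data store is read only once.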

- The synchronous approach is more reliable: the cache waits for the data store to respond, and if the response doesn't arrive, an error can be logged or an exception raised.

- If the system prioritizes performance, consistency can be maintained asynchronously instead: the write-behind pattern.

  - Data is written to the cache and the client gets a response immediately. The cached write is queued and written to the data store later.

  - This improves performance (higher throughput and lower latency) but may lose data if the cache crashes, which can be mitigated by cache replication.
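A minimal write-behind sketch, assuming a single background worker and an in-memory queue. `WriteBehindCache` and `flush` are illustrative names, and `flush` exists only so the example can wait for the queue to drain.

```python
import queue
import threading


class WriteBehindCache:
    def __init__(self, write_store):
        self._write_store = write_store
        self._entries = {}
        self._pending = queue.Queue()  # writes not yet in the data store
        self._worker = threading.Thread(target=self._drain, daemon=True)
        self._worker.start()

    def put(self, key, value):
        self._entries[key] = value
        self._pending.put((key, value))  # enqueue and return immediately

    def get(self, key):
        return self._entries.get(key)

    def _drain(self):
        # Background job: apply queued writes to the data store in order.
        while True:
            key, value = self._pending.get()
            try:
                self._write_store(key, value)
            finally:
                self._pending.task_done()

    def flush(self):
        """Block until every queued write has been handed to the data store."""
        self._pending.join()


# Usage with a dict standing in for the data store.
store = {}
cache = WriteBehindCache(store.__setitem__)
cache.put("k", "v")          # the client is answered right away
value_in_cache = cache.get("k")
cache.flush()                # later: the queued write reaches the data store
```

Anything still sitting in `_pending` when the process dies is lost, which is the data-loss risk noted above.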

- Messaging system: we store metadata (maximum queue size, message retention period, ...).

  - It is stored in the embedded storage.

  - There is also an in-memory cache for fast data access, because the metadata is needed to validate every incoming message.

  - The cache can be implemented using read-through and write-through.